Related video: https://youtu.be/Vj5TRu4xdfo

Children instinctively like to hug whatever they are riding on as they move around. Electric toy cars for children have recently become common, but vehicle technology designed specifically for children has not yet been introduced. Because toy cars directly copy the structure of conventional automobiles, children have to learn how to operate a steering wheel, pedals, and a gearshift to control the car’s speed and direction.

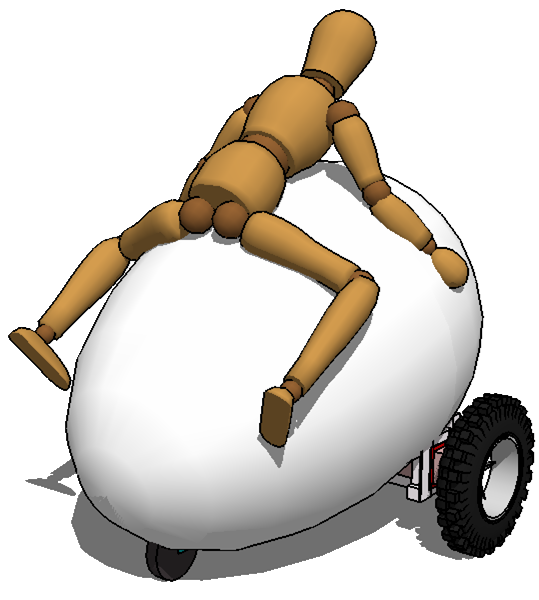

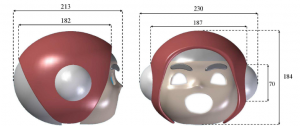

What kind of mobility structure would let young children drive easily and have fun? How can we make a car feel to kids like a plump, soft living thing rather than a hard, solid object? These questions were the starting point for designing a new concept in personal mobility.

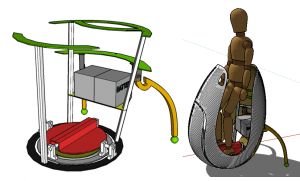

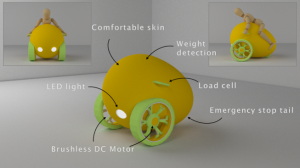

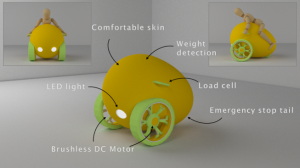

Based on this motivation, we propose an egg-shaped personal mobility device named “EggRan.” We focused on children’s instinctive tendency to hug whatever they are riding on. To support this, we designed a sensing mechanism that detects the movements of a child’s body. We also tried to give the EggRan a sense of comfort and warmth while making sure that the vehicle is durable. Because the EggRan is controlled with the hands only, it can also be operated by people with disabilities who cannot use their legs.

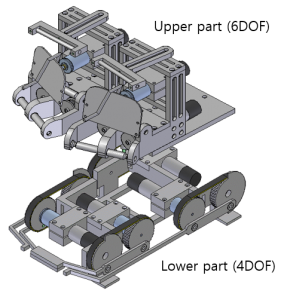

Two electric motors drive the wheels, and a load cell is installed in each handle of the EggRan to sense body movements. The force produced by a child’s body movements is transmitted through the arms to the handles, and the load cells measure its magnitude. Direction and velocity are determined from the difference between the forces on the two handles and from the child’s weight. For instance, when a child leans to the left, a greater pulling force is applied to the right handle, which causes the EggRan to turn left. When a child pulls or pushes both handles with the same force, the EggRan moves forward or backward, respectively. The resulting speed depends on the pulling or pushing force and on the child’s weight.
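The mapping from handle forces to wheel speeds can be illustrated with a minimal sketch. It is not the EggRan’s actual controller: the function name `wheel_commands`, the gains, the deadband, and the sign convention (pull positive, push negative) are all assumptions, and dividing each force by the child’s weight is only one plausible way for the weight to enter the calculation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveParams:
    # All values are illustrative assumptions, not the EggRan's real tuning.
    forward_gain: float = 1.0  # scales the common (forward/backward) component
    turn_gain: float = 0.5     # scales the differential (turning) component
    deadband: float = 0.02     # normalized forces smaller than this are ignored
    max_command: float = 1.0   # limit on each wheel command


def clamp(value, limit):
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def wheel_commands(f_left, f_right, weight, params=DriveParams()):
    """Map handle forces to (left, right) wheel commands.

    f_left, f_right: load cell readings in newtons (pull > 0, push < 0).
    weight: the child's weight in newtons (assumed to be known or measured).
    """
    if weight <= 0:
        raise ValueError("weight must be positive")

    # Assumed normalization: the same pull from a heavier child gives less speed.
    n_left = f_left / weight
    n_right = f_right / weight

    common = (n_left + n_right) / 2  # equal pull or push on both handles
    diff = (n_right - n_left) / 2    # > 0 when the right handle is pulled harder

    # Ignore small forces so sensor noise does not move the vehicle.
    if abs(common) < params.deadband:
        common = 0.0
    if abs(diff) < params.deadband:
        diff = 0.0

    forward = params.forward_gain * common
    turn = params.turn_gain * diff   # > 0 means turn left

    # Differential drive: a faster right wheel turns the vehicle to the left.
    left = clamp(forward - turn, params.max_command)
    right = clamp(forward + turn, params.max_command)
    return left, right
```

With these assumptions, an equal pull on both handles gives equal positive wheel commands, an equal push gives equal negative commands, and a stronger pull on the right handle makes the right wheel faster than the left, so the vehicle turns left as described above.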

The emergency stop is implemented with a magnetic contact switch placed beneath the EggRan. The switch is connected to a long cord that looks like a tail. Pulling the cord so that it detaches from the EggRan’s body stops the whole control system. The vehicle can thus be brought to a complete stop by pulling off the ‘tail’ and restarted by reattaching it.
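The tail’s behavior can be modeled in software as a gate on the wheel commands. This is a minimal sketch only: the names `TailSwitch` and `gated_commands` are hypothetical, and the real switch may instead cut power in hardware.

```python
class TailSwitch:
    """Hypothetical model of the magnetic contact switch on the 'tail'."""

    def __init__(self, attached=True):
        self.attached = attached

    def detach(self):
        # Pulling the tail off opens the magnetic contact.
        self.attached = False

    def attach(self):
        # Reattaching the tail closes the contact again.
        self.attached = True


def gated_commands(switch, left, right):
    """Pass wheel commands through only while the tail is attached."""
    if not switch.attached:
        return 0.0, 0.0  # emergency stop: both wheels commanded to zero
    return left, right
```

Checking the switch on every control cycle, rather than only once at startup, means that the vehicle stops as soon as the tail comes off and resumes only after it is reattached.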

The EggRan is a newly designed personal mobility device that children can control easily. Its shape and surface are sturdy enough for children to enjoy riding it on their own. For this reason, it could serve as a new kind of ride at places such as commercial facilities for kids (amusement parks, theme parks) and rehabilitation facilities for people with lower-body disorders.

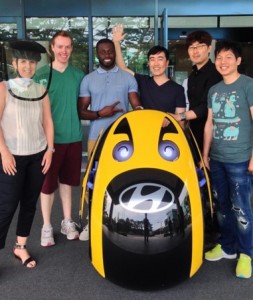

We presented the EggRan at the Busan Motor Show 2012. After that, the EggRan was displayed and demonstrated at many events and exhibitions. When we tried the actual product with children and women at various events, we found that they were interested in its cute design and easy operation.